#25 Edition: Back From India - The Momentum Is Undeniable

PLUS: Shopping research in ChatGPT and a packed week of new model drops

Hey, it’s Andreas.

Welcome back to Human in the Loop - your field guide to the latest in AI agents, emerging workflows, and how to stay ahead of what’s here today and what’s coming next.

Last week’s edition didn’t send correctly, and I apologize for that. I just spent a week in India, so today’s edition includes a few impressions and observations on AI there.

Here’s what else we cover this week:

→ Anthropic introduces Opus 4.5

→ OpenAI brings shopping research to ChatGPT

→ Alibaba’s Qwen wins NeurIPS Best Paper for a gated attention breakthrough

→ And much more.

Let’s dive in.

Weekly Field Notes

🧰 Industry Updates

New drops: Tools, frameworks & infra for AI agents

🌀 United States launches “Genesis Mission” for AI-driven science

→ President Trump signs an Executive Order to accelerate AI-powered scientific discovery using federal research labs, compute, and national datasets.

🌀 Anthropic introduces Opus 4.5

→ Claude Opus 4.5 tops SWE coding benchmarks, outperforming Gemini 3 on multi-step reasoning and tool-use workflows.

🌀 Black Forest Labs drops Flux.2 image models

→ New multi-reference system that preserves character and style across up to ten input images, with lower costs than competing models.

🌀 Microsoft releases Fara-7B for visual control

→ Open 7B model built for computer control and GUI navigation.

🌀 DeepSeek releases DeepSeekMath-V2 with self-auditing

→ Chinese AI lab DeepSeek has introduced DeepSeekMath-V2, a new model for solving math problems that audits its own work and reports strong performance.

🌀 OpenAI discloses Mixpanel security incident

→ An attacker accessed and exported some API users’ profile info from Mixpanel. No chat data, API keys, payment data, or credentials were affected.

🌀 OpenAI brings shopping research to ChatGPT

→ New feature, powered by GPT-5 mini, that scans the internet and generates personalized buyer’s guides.

🌀 Perplexity adds persistent memory

→ Assistants now retain long-term context for personalized workflows.

🌀 Cohere partners with SAP to bring sovereign agentic AI to EU

→ Enterprise-grade, compliance-first deployment for regulated industries.

🌀 Suno and Warner Music create opt-in AI licensing

→ Settlement forms a framework for legal AI music training.

🎓 Learning & Upskilling

Sharpen your edge - top free courses this week

📘 AWS re:Invent 2025 opens free virtual access (1 - 5 December)

→ The Super Bowl of cloud computing is offering free online access to keynotes, technical sessions, and workshops throughout this week.

📘 Redis teams up with DeepLearning.AI on a new semantic caching course

→ Shows how semantic caching can significantly cut LLM latency and compute cost.

📘 Stanford’s one-hour crash course on agentic AI

→ Free and sharp overview of how agents work: LMs, prompts, RAG, tool use, and multi-agent planning. If you watch one AI lecture this week, choose this.
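For anyone wondering what the semantic caching covered in the Redis course above looks like in code, here is a minimal Python sketch. It is not the Redis or course implementation. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and `SemanticCache`, `CountingLLM`, `ask`, and the `threshold` value are hypothetical names and settings chosen for illustration. The idea: if a new question is close enough in meaning to one already answered, reuse the stored answer and skip the LLM call.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding (assumption): lowercase bag-of-words counts.
    A real system would call an embedding model here."""
    return Counter(text.lower().replace("?", "").split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    def __init__(self, threshold=0.8):
        self.threshold = threshold  # minimum similarity to count as a hit
        self.entries = []           # list of (embedding, answer) pairs

    def lookup(self, query):
        """Return the cached answer for the most similar query, or None."""
        q = embed(query)
        best = max(self.entries, key=lambda e: cosine(q, e[0]), default=None)
        if best is not None and cosine(q, best[0]) >= self.threshold:
            return best[1]
        return None

    def store(self, query, answer):
        self.entries.append((embed(query), answer))


class CountingLLM:
    """Stub standing in for a real (slow, paid) model call."""
    def __init__(self):
        self.calls = 0

    def __call__(self, prompt):
        self.calls += 1
        return f"answer to: {prompt}"


def ask(cache, llm, prompt):
    cached = cache.lookup(prompt)
    if cached is not None:
        return cached            # cache hit: no model call
    answer = llm(prompt)         # cache miss: call the model, then remember
    cache.store(prompt, answer)
    return answer


cache, llm = SemanticCache(), CountingLLM()
ask(cache, llm, "What is the capital of France?")
ask(cache, llm, "what is the capital of france")   # near-duplicate wording
ask(cache, llm, "How tall is Mount Everest?")      # unrelated question
print("model calls:", llm.calls)
```

In this sketch the second question is served from the cache, so only the first and third reach the stub model. A production setup would swap the toy embedding for a real one and the list scan for a vector index.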

🌱 Mind Fuel

Strategic reads, enterprise POVs and research

🔹 Harvard’s PopEVE on rare disease mutation detection

→ A newly released AI model called PopEVE can predict how likely each variant in a patient’s genome is to cause disease. The team is currently testing PopEVE in clinical settings.

🔹 Ilya Sutskever on AI’s new phase

→ In a conversation with Dwarkesh Patel, he says AI is shifting into a fundamentally different era with faster capability jumps and new safety challenges.

🔹 NVIDIA’s ToolOrchestra shows orchestration > scaling

→ An 8B coordinator beats larger models via tool routing and planning. Clear trend: architecture and orchestration now matter more than size.

🔹 BCG releases enterprise agent guide

→ Strong focus on KPIs, failure modes, and Agent Design Cards. BCG shares key lessons it learned “the hard way” about building agents successfully.

🔹 WEF governance framework on agentic AI

→ 82% of executives expect agent adoption. WEF outlines oversight, liability, and control patterns.

🔹 MIT Iceberg Index on workforce exposure

→ $1.2T in US labor tasks exposed to AI. Clear map of where displacement and augmentation will hit first.

🔹 Alibaba’s Qwen wins NeurIPS Best Paper

→ Recognized for a gated attention breakthrough that could reshape future model architectures.

🔹 Anthropic on real-world productivity gains

→ Study shows Claude cuts the time needed for 90-minute tasks by 80%, on tasks worth about $55 in labor.

♾️ Thought Loop

What I've been thinking, building, circling this week

I spent last week in our IBM office in Kolkata, and it was a reminder of something I’ve seen for a decade: the depth of talent in India is not an abstract idea - it’s real, it’s broad, and it’s accelerating. Meeting the next generation - people who grew up learning with LLMs and ChatGPT instead of textbooks alone - feels like seeing the future arrive early. Their confidence, pace, and curiosity hit different.

Team moment from our Ethnic Cultural Day celebration

I’m convinced India will play a defining role in the next chapter of AI. No question.

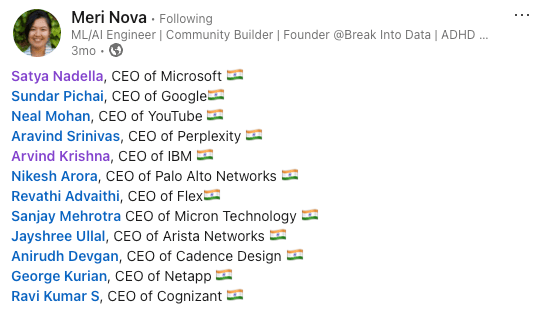

And yet, the paradox remains: India is the world’s largest exporter of tech talent, but one of the smallest inventors of AI. Just look at the global leadership table: Microsoft, Google, YouTube, IBM, Palo Alto Networks, Flex, Micron, Arista, NetApp, Cognizant - all led by Indian-origin CEOs.

India is not short on skill or scale. What’s missing is the translation of that capacity into homegrown invention.

For decades, India’s IT companies thrived on services, not invention. R&D spend sits at just 0.65% of GDP (vs. 2.7% in China, 3.5% in the U.S.). Venture funding paints an even starker picture: in 2024, Indian AI startups raised $780M, compared with $97B in the U.S. and $51B in Europe. Add to that the challenge of 22 official languages and 19,500 dialects — many without clean digital datasets — and India looked structurally disadvantaged in the foundation model race.

But recent developments — especially DeepSeek’s sudden rise in China — have changed the equation. Practically overnight, New Delhi shifted gears:

$1.25B IndiaAI Mission → to fund sovereign models and national AI infrastructure.

19,000 GPUs pooled (13,000 H100s included) → through Jio, Yotta, AWS partners, Tata and others.

Six sovereign models funded → including Sarvam AI’s 70B multilingual LLM with reasoning + voice.

Startup wave → Soket AI Labs (120B params), Gan.ai (70B), Gnani.ai (14B voice), and CoRover.ai’s BharatGPT (3.2B multimodal, now shipping a “Mini” at 534M).

However, the technical barriers are real:

Sparse data → Many Indian languages have little digital text or consistent spelling.

Hard tokenization → Text in languages like Hindi, Kannada, and Tamil often lacks clear word breaks, making word boundaries hard for global LLM tokenizers to see.

Capital gap → India’s startups run on tiny budgets compared to their Western or Chinese peers.
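One related and easily checked factor behind the tokenization barrier: Indic scripts take more bytes per character in UTF-8 than Latin text, so tokenizers that start from raw bytes see longer sequences before any merges are learned. The snippet below is only a rough illustration using Python's standard library. It is not a real tokenizer, and the sample greetings and the `byte_stats` helper are my own illustrative choices.

```python
# Sample greetings (illustrative choices, not from any benchmark).
samples = {
    "English": "hello",
    "Hindi": "नमस्ते",
    "Tamil": "வணக்கம்",
    "Kannada": "ನಮಸ್ಕಾರ",
}


def byte_stats(text):
    """Return (code points, UTF-8 bytes, bytes per code point) for a string."""
    n_points = len(text)
    n_bytes = len(text.encode("utf-8"))
    return n_points, n_bytes, n_bytes / n_points


for lang, text in samples.items():
    points, nbytes, ratio = byte_stats(text)
    print(f"{lang:8s} code points={points:2d}  utf-8 bytes={nbytes:2d}  bytes/code point={ratio:.1f}")
```

The Latin sample comes out at one byte per code point, while the Devanagari, Tamil, and Kannada samples come out at three. How much this inflates actual token counts depends on the tokenizer's vocabulary, which this sketch does not model.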

And yet, Indian builders are innovating under constraints. Techniques like “balanced tokenization” and voice-first design are producing efficient multilingual models tuned for India’s reality - and potentially for the Global South at large. India also has structural advantages to build on:

Talent leverage → Millions of engineers are already fluent in global AI, and domestic infrastructure gives them a reason to stay at home.

Language moat → Cracking multilingual, voice-first AI creates defensible IP.

Cost edge → Data centers in India cost ~50% less to build vs. U.S. or Europe.

I’m convinced that if India executes, it will do more than catch up, given the sheer scale and world-class talent behind it. Whether this shift happens tomorrow or over the next few years is uncertain - but it’s worth keeping a close eye on how Indian companies, startups, and policymakers are moving. What they do, and how they do it, could set the standard for sovereign AI done differently: efficient, multilingual, and built for billions outside the Western mainstream.

🔧 Tool Spotlight

A tool I'm testing and watching closely this week

LLM Council is a small but extremely useful repo from Andrej Karpathy that lets you ask one question and get answers from multiple AI models at once. Each model then reviews the others, and a “chairman” model produces one final, improved answer.

The benefit is simple: you don’t need to ask every model individually. Instead, you bring them together into your own LLM Council - whether that’s GPT-5.1, Gemini 3 Pro, Claude Sonnet 4.5, Grok 4, or any other model you rely on. It saves time, improves reliability, and gives you a clearer sense of how different models think.
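The three-stage flow described above (independent answers, peer review, chairman synthesis) can be sketched roughly like this in Python. This is not Karpathy's code. The model callables are stubs standing in for real API calls, and `run_council`, `make_stub`, and the prompt wording are assumptions made purely for illustration.

```python
from typing import Callable, Dict

# Hypothetical model interface: takes a prompt string, returns a text reply.
Model = Callable[[str], str]


def run_council(question: str, members: Dict[str, Model], chairman: Model) -> str:
    # Stage 1: every member answers the question independently.
    answers = {name: model(question) for name, model in members.items()}

    # Stage 2: each member reviews the answers written by the other members.
    reviews = {}
    for name, model in members.items():
        others = "\n".join(f"- {a}" for n, a in answers.items() if n != name)
        reviews[name] = model(f"Review these answers to '{question}':\n{others}")

    # Stage 3: the chairman sees all answers and reviews, then writes the final reply.
    briefing = "\n".join(
        [f"Question: {question}"]
        + [f"Answer from {n}: {a}" for n, a in answers.items()]
        + [f"Review by {n}: {r}" for n, r in reviews.items()]
    )
    return chairman(briefing)


def make_stub(name: str) -> Model:
    """Stub model (assumption): replace with a real API client in practice."""
    return lambda prompt: f"[{name}] response ({len(prompt)} chars of input)"


members = {name: make_stub(name) for name in ("model_a", "model_b", "model_c")}
final = run_council("What is semantic caching?", members, chairman=make_stub("chairman"))
print(final)
```

With real models, each stage would be a batch of API calls, and the chairman prompt is where most of the tuning would likely happen.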

→ Try it here: LLM Council

That’s it for today. Thanks for reading.

Enjoy this newsletter? Please forward to a friend.

See you next week, and have an epic week ahead,

- Andreas

P.S. I read every reply - if there’s something you want me to cover or share my thoughts on, just let me know!
