#14 Edition: Two ChatGPT switches that set you apart from 90% of users

PLUS: OpenAI starts building chips and Lovable introduces voice mode

Hey, it’s Andreas.

Welcome back to Human in the Loop — your field guide to the latest in AI agents, emerging workflows, and how to stay ahead of what’s here today and what’s coming next.

This week:

OpenAI bets on custom AI chips and launches an AI-native job platform

Anthropic raises $13B while paying a $1.5B settlement to authors — a first on training data

And UNESCO drops its global report on AI in education, where I was honored to contribute a think piece

And in today’s deep dive: two simple ChatGPT switches that set you apart from 90% of users.

Let’s dive in.

Weekly Field Notes

🧰 Industry Updates

New drops: Tools, frameworks & infra for AI agents

🌀 OpenAI to produce custom AI chips with Broadcom

→ Reported $10B deal to mass-produce chips starting next year. Built to double compute for GPT-5, ease GPU shortages, and reduce reliance on Nvidia — putting OpenAI alongside Google, Amazon, and Meta in the custom silicon race.

🌀 OpenAI launches Jobs & AI Certifications

→ Platform to connect future workforce with AI-native roles and credentials. Could reshape the hiring funnel. A serious competitor to LinkedIn?

🌀 OpenAI adds Branch Conversations

→ Lets users explore parallel chat paths. A simple but powerful UX feature for reasoning and brainstorming (according to OpenAI, one of the most requested features).

🌀 Anthropic raises $13B Series F

→ Valued at $183B. Puts them firmly in the same capital league as OpenAI and Google.

🌀 Anthropic agrees to $1.5B author settlement

→ First major payout for AI training data misuse. Covers ~500K pirated books at $3K each, forces deletion of shadow library files, and sets precedent: piracy = violation, purchased books = fair use.

🌀 Google launches EmbeddingGemma

→ Lightweight, on-device embedding model built for speed and privacy. Positions Google to compete in the small-model race where efficiency beats scale.

🌀 Atlassian acquires The Browser Company (Arc/Dia) for $610M

→ Bet on AI-first browsers as the future of work. Browsers become operating systems for agents (I wrote about this several times here).

🌀 Visa unlocks MCP Server + Agent Toolkit

→ Speeds up AI-driven payments. A key step for agentic commerce infrastructure.

🌀 Mistral’s Le Chat adds MCP connectors + Memories

→ Stronger workflow automation, signaling a move toward agent orchestration.

🌀 Lovable introduces Voice Mode

→ Build full-stack apps entirely by voice. Pushes vibe coding into new terrain.

🎓 Learning & Upskilling

Sharpen your edge - top free courses this week

📘 UC Berkeley Agentic AI MOOC (Fall 2025)

→ This is your chance to learn Agentic AI from a top-tier US university for free. UC Berkeley has created a MOOC (Massive Open Online Course) on agent frameworks, reasoning, planning, and applications. Guest speakers include leaders from OpenAI, NVIDIA, Google, Microsoft, and DeepMind. Lectures run weekly (Sep 15–Dec 1).

📘 Stanford puts its AI/ML curriculum online [Free]

→ Seven legendary courses, all taught by Stanford professors and free to access. If you master even half, you’ll know more than 90% of people who just “use AI.”

📘 Perplexity gives students free access to Comet AI browser & study tools

→ Hands-on entry point for learners, marrying research tools with AI-native UX.

📘 Google Official Nano Banana 48h Global Hackathon

→ Build with Gemini 2.5 Flash Image (aka Nano Banana) on Sept 6–7. $400K in prizes, free-tier access (100 generations/day), and all projects submitted via Kaggle. Judged on innovation, technical execution, impact, and presentation.

🌱 Mind Fuel

Strategic reads, enterprise POVs and research

🔹 UNESCO on AI & the Future of Education

→ 160+ page global report unveiled at Digital Learning Week in Paris. Covers AI futures, powers and perils, pedagogies, teacher roles, governance, inequalities, and policy. Core message: AI in education must amplify teachers, respect human learning, and be governed with care and inclusion. I was honored to contribute a think piece to this edition.

🔹 Google’s 2025 ROI Report

→ Google just surveyed 3,466 enterprise leaders on AI. The positive news: 74% of businesses see AI pay off in year one. Strong validation that the AI wave isn’t just hype.

🔹 OpenAI on why chatbots hallucinate

→ New research argues models guess because training rewards confidence over admitting uncertainty. OAI proposes penalizing confident errors to shift behavior — a path to systems that know their limits.

🔹 AWS on Agentic AI

→ New executive guide frames agentic AI as a structural shift, not just another automation wave. Covers what agents are, how they differ from software, why outcomes matter, and a leadership playbook.

♾️ Thought Loop

What I've been thinking, building, circling this week

There’s a lot of attention on autonomous agents and end-to-end workflows right now. But in day-to-day work, I find that a few simple features inside ChatGPT already deliver outsized impact — if you set them up properly.

What still surprises me: when I talk to colleagues, friends, or read replies to this newsletter, I see many professionals still using ChatGPT in a very basic way, almost like a Google replacement. Prompting skills are improving, yes. But there is far more leverage in a customized setup that makes ChatGPT relevant to your specific role and workflow. It takes only a few minutes, yet it remains heavily underused.

We’re here to change that. This week, I want to highlight two areas:

→ Connectors

→ Custom Instructions

Connectors: linking ChatGPT with your tools

ChatGPT introduced Connectors a few weeks ago for services like Gmail, Calendar, and Drive. It hasn’t received much attention yet, but the function is straightforward: instead of copy-pasting, ChatGPT can now work directly with your information.

Claude offered something similar through MCP, but that setup was clunky, not integrated, and too complex for non-technical users. With ChatGPT’s native Connectors, the setup is simple. Nothing fancy — but the impact is significant. The model can finally operate on your real data, not just what you manually feed it.

I’ve tested Connectors over the past few weeks. The benefit isn’t advanced automation — it’s the removal of friction. And the good part: setup is straightforward — open Settings in the bottom left of ChatGPT, enable Connectors, and choose the services you want to link.

I’ve connected Gmail, Google Calendar, and Drive — and now ChatGPT can:

Skim unread emails and give me a summary

Summarize threads + draft replies

Pull key info from old conversations

Prep meetings + agendas in seconds

Search past Drive files and email threads for anything that’s documented there

It’s not magic — but if you spend hours in your inbox, this saves real time.

[ ⚠️ Quick reminder that enabling connectors gives ChatGPT view access to your private data. I'm an AI power user and it's part of my job to test everything, so personally, I make the sacrifice for the extra features. But please be careful if your job is data-sensitive. ⚠️ ]

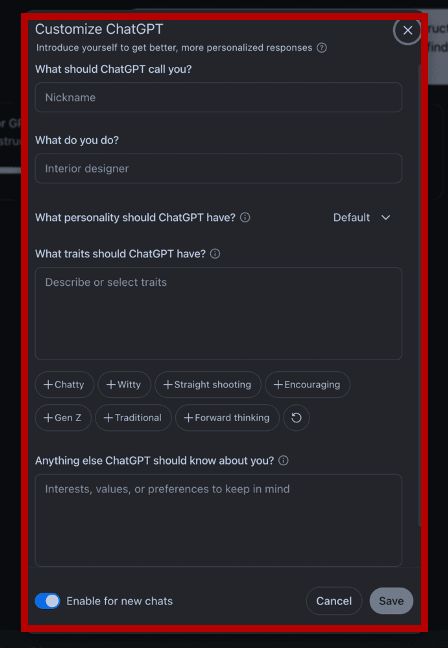

Why Custom Instructions Are So Important

Let’s move to the second feature: Custom Instructions.

Every time you open ChatGPT, you’re starting from a blank slate.

Yes, there’s memory — but it’s limited. By default, ChatGPT doesn’t know:

Who you are

What you do

How you prefer to communicate

The kind of work you’re focused on

That means you waste time re-explaining context. Or worse - ChatGPT “wings it” and gives generic answers.

Custom Instructions fix this.

They let you set permanent guidance once, so ChatGPT always knows your role, style, and preferences. These instructions are injected into every conversation, making responses more relevant, consistent, and aligned with your goals.

The result:

Less friction — no need to repeat yourself

Higher quality output — answers tailored to your work and audience

Compounding ROI — one-time setup, lifetime of better results

Think of Custom Instructions as your personal operating system for AI.

Set them up once, and every interaction becomes sharper, faster, and more useful.

Custom Instructions Template (Role-Based)

I use the following structure in my own work and recommend it in professional settings — it makes ChatGPT far more relevant and consistent (just copy it):

What do you do?

I am a [Job Title/Role] working in [Industry/Domain]. My focus is [brief description of responsibilities, e.g., leading digital transformation projects, writing thought leadership, managing product launches, etc.]. My target audience includes [internal stakeholders, executives, clients, students, etc.].

What traits should ChatGPT have?

Ask up to 3 clarifying questions if my request is ambiguous; otherwise skip and proceed.

Always outline a 3-bullet “Approach” before the full answer.

Be concise, structured, and data-driven. No hype, no unnecessary jargon.

Use clear H2 headings, bullets, and short paragraphs for skimmability.

Define acronyms on first use.

End every response with:

Assumptions: bulleted list of assumptions made.

Next steps: 2–3 concrete suggestions.

What I need from you: 1–2 targeted questions to refine the next answer.

Suggested next step: 1 concrete action I can take right away.

If I say “coach me”, switch to coaching mode: ask one workflow/goals question at a time, then suggest 2 obvious + 2 creative AI applications.

Anything else ChatGPT should know about you?

Role & audience: I’m a [role] writing for [target audience].

Voice: concise, direct, data-driven.

Constraints: Aim for ≤ [N] words unless I specify otherwise.

When citing facts or stats, include 1–2 trustworthy sources.

Review and adjust output tone as if it’s going directly to [audience type: executives, students, technical peers].
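If you maintain several versions of this template (one per role, say), a small script can fill in the bracketed placeholders and flag any you forgot before you paste the text into ChatGPT. The following is only a sketch: the helper names `fill_placeholders` and `unfilled`, and the sample values, are my own illustrations, and the excerpt uses just two lines of the template above.

```python
import re

# Two lines copied from the template above; the rest works the same way.
TEMPLATE = (
    "I am a [Job Title/Role] working in [Industry/Domain]. "
    "Constraints: Aim for ≤ [N] words unless I specify otherwise."
)

PLACEHOLDER = re.compile(r"\[([^\]]+)\]")


def fill_placeholders(template: str, values: dict[str, str]) -> str:
    """Replace each [placeholder] that has a value; leave the others untouched."""
    def substitute(match: re.Match) -> str:
        return values.get(match.group(1), match.group(0))
    return PLACEHOLDER.sub(substitute, template)


def unfilled(text: str) -> list[str]:
    """Return the names of placeholders still present in the text."""
    return PLACEHOLDER.findall(text)


# Hypothetical sample values, for illustration only.
filled = fill_placeholders(
    TEMPLATE,
    {"Job Title/Role": "Product Manager", "Industry/Domain": "Fintech"},
)
print(filled)
print("Still to fill in:", unfilled(filled))
```

Here the word limit `[N]` is deliberately left out, so the second line reports it as still open.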

🔧 Tool Spotlight

A tool I'm testing and watching closely this week

Most LLMs choke when you try to feed them raw codebases. They weren’t designed for 10k-file repos with complex structures.

Gitingest fixes that.

It takes any GitHub repo and turns it into a prompt-friendly text digest — clean, structured, and ready for context injection.

How it works:

→ Replace hub with ingest in any GitHub URL (github.com/... → gitingest.com/...)

→ Get a digest: repo tree, file contents, token stats

→ Works with public and private repos (with a token)

→ Use it via browser extension, CLI, or Python package
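The URL swap in the first step is easy to script if you want to do it for many repos. Below is a minimal sketch using only Python’s standard library; the function name `to_gitingest_url` and the example repo path are placeholders of mine, not part of Gitingest itself.

```python
from urllib.parse import urlsplit, urlunsplit


def to_gitingest_url(github_url: str) -> str:
    """Swap the github.com host for gitingest.com and keep the rest of the URL."""
    parts = urlsplit(github_url)
    if parts.netloc.lower() not in ("github.com", "www.github.com"):
        raise ValueError(f"not a GitHub URL: {github_url}")
    return urlunsplit(
        (parts.scheme, "gitingest.com", parts.path, parts.query, parts.fragment)
    )


# Hypothetical repo path, for illustration only.
print(to_gitingest_url("https://github.com/example-owner/example-repo"))
```

Replacing only the host, rather than doing a plain string replace on "hub", avoids accidentally rewriting a repo or owner name that happens to contain "hub".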

Why it matters:

Fast onboarding → Drop a repo into ChatGPT/Claude without manual copy-paste

Better prompting → Optimized formatting for LLMs (tree + content + token counts)

Flexible setup → Use it as a package, a CLI, or even self-host with Docker

Try it now:

→ Explore Gitingest on GitHub

That’s it for today. Thanks for reading.

Enjoy this newsletter? Please forward to a friend.

See you next week and have an epic week ahead,

— Andreas

P.S. I read every reply. If there’s a topic you’d like me to cover or weigh in on, just let me know!
